NVIDIA H200 NVL 141GB PCIe Accelerator for HPE

- NVIDIA H200 NVL is ideal for air-cooled enterprise rack designs that require flexible configurations.

- With up to four GPUs connected by NVIDIA NVLink™ and 1.5x more memory, H200 NVL accelerates LLM inference by up to 1.7x and HPC by up to 1.3x compared to H100 NVL.

- NVIDIA H200 NVL comes with a five-year NVIDIA AI Enterprise subscription and simplifies the way you build an enterprise AI-ready platform.

- Fourth-generation NVLink provides high-bandwidth, direct GPU-to-GPU communication.

- Fourth-generation Tensor Cores accelerate AI and HPC workloads.

- NVIDIA confidential computing is a built-in security feature of Hopper-based accelerators.

Overview

Do you require higher performance for artificial intelligence (AI) training and inference, high-performance computing (HPC), or graphics? NVIDIA® Accelerators for HPE help solve the world's most important scientific, industrial, and business challenges with AI and HPC. Visualize complex content to create cutting-edge products, tell immersive stories, and reimagine cities of the future. Extract new insights from massive datasets. Hewlett Packard Enterprise servers with NVIDIA accelerators are designed for the age of elastic computing, providing unmatched acceleration at every scale.

Develop and Deploy AI at Any Scale

Build new AI models with supervised or unsupervised training for generative AI, computer vision, large language models (LLMs), scientific discovery, and financial market modeling with NVIDIA accelerators and HPE Cray systems. Get real-time inference for computer vision, natural language processing, fraud detection, predictive maintenance, and medical imaging with NVIDIA accelerators and HPE ProLiant Compute servers. NVIDIA Accelerators for HPE improve computational performance, dramatically reducing the completion time for parallel tasks and delivering faster time to solution.

NVIDIA Qualified and NVIDIA Certified HPE Servers

NVIDIA Accelerators for HPE undergo thermal, mechanical, power, and signal integrity qualification to validate that the accelerator is fully functional in the server. NVIDIA Qualified configurations are supported for production use. NVIDIA-Certified HPE servers are tested to validate both multi-GPU and multinode performance for a diverse set of workloads to deliver excellent application performance, manageability, security, and scalability.

HPE Integrated Lights-Out (iLO) Management

HPE iLO server management software enables you to securely configure, monitor, and update your NVIDIA Accelerators for HPE seamlessly, from anywhere in the world. HPE iLO is an embedded technology that helps simplify server and accelerator setup, health monitoring, and power and thermal control, utilizing HPE's Silicon Root of Trust.

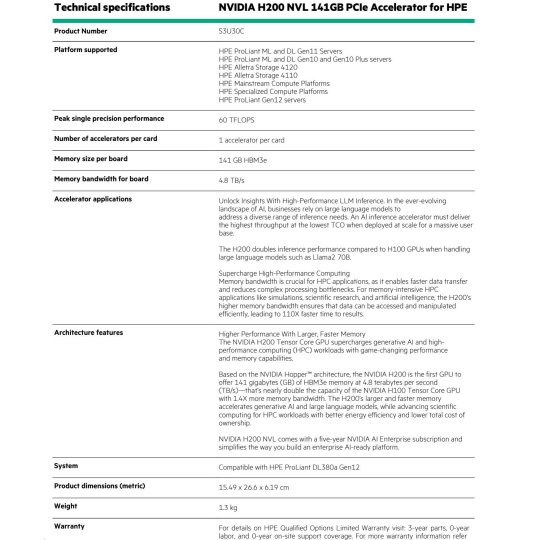

Technical specifications NVIDIA H200 NVL 141GB PCIe Accelerator for HPE

Product Number S3U30C

Platform supported

- HPE ProLiant ML and DL Gen11 servers
- HPE ProLiant ML and DL Gen10 and Gen10 Plus servers
- HPE Alletra Storage 4120
- HPE Alletra Storage 4110
- HPE Mainstream Compute Platforms
- HPE Specialized Compute Platforms
- HPE ProLiant Gen12 servers

Peak single precision performance 60 TFLOPS

Number of accelerators per card 1 accelerator per card

Memory size per board 141 GB HBM3e

Memory bandwidth for board 4.8 TB/s

Accelerator applications

Unlock Insights With High-Performance LLM Inference

In the ever-evolving landscape of AI, businesses rely on large language models to address a diverse range of inference needs. An AI inference accelerator must deliver the highest throughput at the lowest total cost of ownership (TCO) when deployed at scale for a massive user base. The H200 delivers up to 2X the inference performance of H100 GPUs when handling large language models such as Llama 2 70B.

Supercharge High-Performance Computing

Memory bandwidth is crucial for HPC applications because it enables faster data transfer and reduces bottlenecks in complex processing. For memory-intensive HPC applications like simulations, scientific research, and artificial intelligence, the H200's higher memory bandwidth ensures that data can be accessed and manipulated efficiently, leading to up to 110X faster time to results compared to CPUs.

Architecture features

Higher Performance With Larger, Faster Memory

The NVIDIA H200 Tensor Core GPU supercharges generative AI and high-performance computing (HPC) workloads with game-changing performance and memory capabilities. Based on the NVIDIA Hopper™ architecture, the NVIDIA H200 is the first GPU to offer 141 gigabytes (GB) of HBM3e memory at 4.8 terabytes per second (TB/s), nearly double the capacity of the NVIDIA H100 Tensor Core GPU with 1.4X more memory bandwidth. The H200's larger and faster memory accelerates generative AI and large language models while advancing scientific computing for HPC workloads with better energy efficiency and lower total cost of ownership.

System Compatible with HPE ProLiant DL380a Gen12

Product dimensions (metric) 15.49 x 26.6 x 6.19 cm

Weight 1.3 kg

Warranty 3-year parts, 0-year labor, and 0-year on-site support coverage. For details, see the HPE Qualified Options Limited Warranty.
